Varjo & Unity: Dynamic Human-Eye Resolution Photogrammetry

If you want to show realistic, lifelike environments in virtual reality, the easiest way is to make a photogrammetry scan of a real-world location and show it as a digital twin in VR. Varjo created a photogrammetry scene with Unity, using dynamic lighting to better simulate real-world environments and to show the potential of photogrammetry for professional use.

Photogrammetry for VR: Making of the Koyasan Okunoin Cemetery demo

Photogrammetry is a method for scanning real-life objects or spaces into 3D with a digital camera. You take a large number of pictures of the capture target and generate a 3D scene from the photographs.

With Varjo, exploring the finest details of buildings, construction sites or other spaces is for the first time possible in human-eye resolution VR. 20/20 resolution expands the use cases of photogrammetry VR for industrial use.

To illustrate the potential of dynamic, human-eye resolution VR photogrammetry, we at Varjo created a dynamic, Made with Unity demo of one of Japan’s holiest places, the Okunoin cemetery at Mount Koya. In this article, we explain how it was done.

“Exploring the finest details in spaces expands the use cases of photogrammetry VR for industrial use.”

Capturing the photogrammetry location

Photogrammetry starts with choosing a suitable capture location or target object. Not all places or objects are suitable for photogrammetry capture. We chose to capture an old cemetery on Mount Koya in Japan because we wanted to do something culturally significant while also having plenty of detail to explore in the demo. Since this was an outdoor capture, the conditions were very hard to control. But here at Varjo we like challenges.

The key challenges in this capture were:

1. Movement. The Okunoin cemetery at Koyasan is big and ancient. Surprisingly many tourists visited it every day, and a camera on a tripod was a real people magnet. But in photogrammetry, the scene you are capturing should be completely still, with nothing moving around. This is a problem when capturing anything large: even if the object itself does not move, the light source (the sun) does. If the shoot takes a few hours, the shadows may change a lot.

2. Weather. An outdoor capture should be done in overcast weather. Of course, it cannot rain during the capture or before it. Wet surfaces look different from dry ones, and the scene should look the same throughout the shoot.

3. Ground. The cemetery floor in the chosen location was very difficult to capture, as it was covered with short pine branches and twigs that moved when we walked around them.

When taking the photos of the photogrammetry scene, a general rule is that each picture should overlap with its neighboring picture by at least 30%. The main goal is to photograph the target from as many angles as possible while keeping the images overlapping.
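As a rough illustration of the overlap rule, the sketch below estimates how far the camera can move sideways between two shots. It assumes a simple pinhole camera and a flat target facing the camera; the helper names `footprint_width` and `max_step_for_overlap` and the sample values are made up for this example and are not taken from the Koyasan shoot.

```python
import math


def footprint_width(distance_m: float, horizontal_fov_deg: float) -> float:
    """Width of the area the camera sees on a flat surface facing it."""
    return 2.0 * distance_m * math.tan(math.radians(horizontal_fov_deg) / 2.0)


def max_step_for_overlap(distance_m: float,
                         horizontal_fov_deg: float,
                         min_overlap: float = 0.30) -> float:
    """Largest sideways move between two shots that keeps the required overlap."""
    if not 0.0 <= min_overlap < 1.0:
        raise ValueError("min_overlap must be in [0, 1)")
    # If a fraction `min_overlap` of the footprint must be shared,
    # the camera may shift by the remaining fraction of the footprint.
    return footprint_width(distance_m, horizontal_fov_deg) * (1.0 - min_overlap)


if __name__ == "__main__":
    # Hypothetical values: 2 m from the target, 90 degree horizontal field of view.
    step = max_step_for_overlap(distance_m=2.0, horizontal_fov_deg=90.0)
    print(f"Move at most {step:.2f} m between shots")
```

In practice, uneven surfaces and changing viewing angles reduce the real overlap, so a smaller step than this estimate is the safer choice.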

The area captured in Koyasan was scanned in much the same way as one would scan a room. For this scene, about 2,500 photographs were taken.

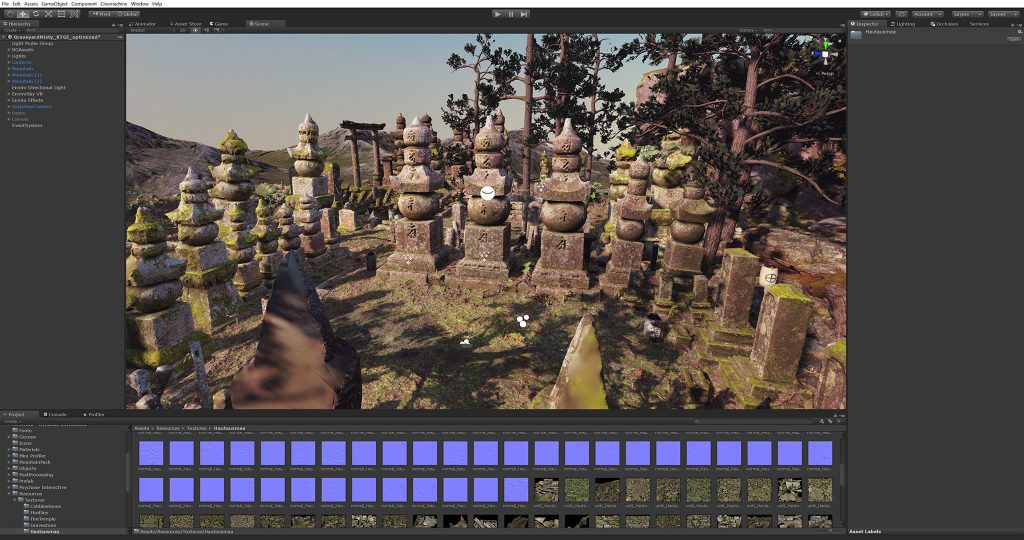

Building the dynamic 3D scene with Unity

Photogrammetry delivers realistic immersion, but its static lighting often narrows down the realistic use cases. We wanted to use dynamic lighting to simulate a realistic environment. Unity provides a great platform for constructing and rendering highly detailed scenes, which made it easy to automate the workflow.

We also used the excellent Delighting tool and the Unity Asset Store to help us fill the gaps when needed. Some trees and stones from Unity’s fantastic Book of the Dead assets were also used.

While we were shooting the site, we transferred files continuously to save time in the 3D construction. First, we used software called Reality Capture to create a 3D scene from the photographs.

Mesh Processing and UVs

The 3D scene was exported from Reality Capture as a single 10-million-polygon mesh with a set of 98 8k textures.

In Houdini, the mesh was run through Voronoi Fracture, which splits the mesh into smaller, more manageable pieces. Several LOD levels were then generated with shared UVs. This was done to avoid texture popping between LOD levels.

That way, the textures were small enough for Unity to chew and we could get the Umbra occlusion culling working. It was also lighter to generate UVs when the pieces were smaller.
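The idea behind splitting a mesh into Voronoi cells can be sketched in a few lines. This is not Houdini's Voronoi Fracture node, only a simplified illustration: it assigns each whole triangle to the nearest seed point by its centroid, whereas a real fracture also cuts triangles along the cell boundaries. All names and sample data here are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def centroid(tri: Triangle) -> Vec3:
    """Average of the three triangle corners."""
    return tuple(sum(v[i] for v in tri) / 3.0 for i in range(3))


def squared_distance(a: Vec3, b: Vec3) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def split_by_nearest_seed(triangles: Sequence[Triangle],
                          seeds: Sequence[Vec3]) -> Dict[int, List[Triangle]]:
    """Group triangles into pieces, one piece per Voronoi seed point."""
    if not seeds:
        raise ValueError("at least one seed point is required")
    pieces: Dict[int, List[Triangle]] = defaultdict(list)
    for tri in triangles:
        c = centroid(tri)
        # The Voronoi cell of a seed is the region closer to it than to any other seed.
        nearest = min(range(len(seeds)), key=lambda i: squared_distance(c, seeds[i]))
        pieces[nearest].append(tri)
    return dict(pieces)


if __name__ == "__main__":
    tri_a = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    tri_b = ((10.0, 0.0, 0.0), (11.0, 0.0, 0.0), (10.0, 1.0, 0.0))
    pieces = split_by_nearest_seed([tri_a, tri_b], [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)])
    for seed_index, tris in sorted(pieces.items()):
        print(f"piece {seed_index}: {len(tris)} triangle(s)")
```

Each resulting piece can then get its own UV layout and LOD chain, which is what keeps the per-piece textures small.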

A shader was created to bake the different textures. Unity’s de-lighting tool requires at least albedo, ambient occlusion, normal, bent normal and position maps. Most of these are straightforward to bake out of the box, but bent normals are less obvious. Luckily, a bent normal is essentially the average direction of the occlusion rays that miss all geometry, and VEX has a simple function called occlusion() that outputs exactly that.
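To make the bent normal idea concrete, here is a minimal Monte Carlo sketch in plain Python. It is not the VEX occlusion() function or the shader used for the demo: the `is_occluded` callback stands in for a real ray cast against the scene, and every name in it is an assumption made for illustration.

```python
import math
import random
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return tuple(c / length for c in v)


def sample_hemisphere(normal: Vec3, rng: random.Random) -> Vec3:
    """Uniform random unit direction on the hemisphere around `normal`."""
    while True:
        d = (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
        if sum(c * c for c in d) > 1e-12:
            break
    d = normalize(d)
    # Flip directions that point below the surface.
    if sum(a * b for a, b in zip(d, normal)) < 0.0:
        d = tuple(-c for c in d)
    return d


def bent_normal(normal: Vec3,
                is_occluded: Callable[[Vec3], bool],
                samples: int = 2000,
                seed: int = 0) -> Tuple[Vec3, float]:
    """Return (bent normal, occlusion fraction) for one surface point."""
    rng = random.Random(seed)
    n = normalize(normal)
    total = [0.0, 0.0, 0.0]
    missed = 0
    for _ in range(samples):
        d = sample_hemisphere(n, rng)
        if not is_occluded(d):  # the ray "missed" all geometry
            missed += 1
            for i in range(3):
                total[i] += d[i]
    if missed == 0:
        return n, 1.0  # fully occluded: fall back to the geometric normal
    return normalize(tuple(total)), 1.0 - missed / samples


if __name__ == "__main__":
    # Hypothetical occluder: a wall blocking every direction with positive x.
    bent, occlusion = bent_normal((0.0, 0.0, 1.0), lambda d: d[0] > 0.0)
    print("bent normal:", tuple(round(c, 3) for c in bent))
    print("occlusion:", round(occlusion, 3))
```

With the wall on the positive x side, the bent normal leans toward negative x, which is the direction most of the ambient light would arrive from.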

Delighting

We created a Python script to automatically run the textures through the batch script provided by the Unity delighting tool.
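A script of this kind might look roughly like the sketch below. It is not the script used for the demo. The file naming convention (`<piece>_<map>.png`), the map suffixes and the `delight_one` callback are all assumptions; the callback is where a call to the delighting tool's batch script would go, and its actual command line is deliberately not guessed here.

```python
from pathlib import PurePath
from typing import Callable, Dict, Iterable, List

# Maps the de-lighting step needs for each mesh piece (suffix names are hypothetical).
REQUIRED_MAPS = ("albedo", "ao", "normal", "bentnormal", "position")


def group_texture_sets(paths: Iterable[str]) -> Dict[str, Dict[str, str]]:
    """Group files named '<piece>_<map>.<ext>' into one texture set per piece."""
    sets: Dict[str, Dict[str, str]] = {}
    for path in paths:
        piece, sep, map_name = PurePath(path).stem.rpartition("_")
        if not sep or not piece or map_name not in REQUIRED_MAPS:
            continue  # not a baked map we know about
        sets.setdefault(piece, {})[map_name] = path
    return sets


def missing_maps(texture_set: Dict[str, str]) -> List[str]:
    return [m for m in REQUIRED_MAPS if m not in texture_set]


def run_batch(paths: Iterable[str],
              delight_one: Callable[[str, Dict[str, str]], None]) -> List[str]:
    """Run `delight_one` on every complete set; return the pieces that were skipped."""
    skipped: List[str] = []
    for piece, texture_set in sorted(group_texture_sets(paths).items()):
        if missing_maps(texture_set):
            skipped.append(piece)
            continue
        delight_one(piece, texture_set)
    return skipped


if __name__ == "__main__":
    files = [f"bake/rock_012_{m}.png" for m in REQUIRED_MAPS] + ["bake/rock_013_albedo.png"]
    skipped = run_batch(files, lambda piece, maps: print("delighting", piece))
    print("skipped (incomplete sets):", skipped)
```

Checking for complete sets before calling the tool keeps one missing bake from stopping a long batch run.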

If the scan has a lot of color variation, the delighting tool has trouble estimating the environment probe. We therefore settled on a hybrid approach that combined automatic delighting with traditional image-based shadow removal.

A Unity AssetPostprocessor script was written to import the processed models. It handled material creation and texture assignment.

A total of 128 4k textures were processed, baked and delighted.

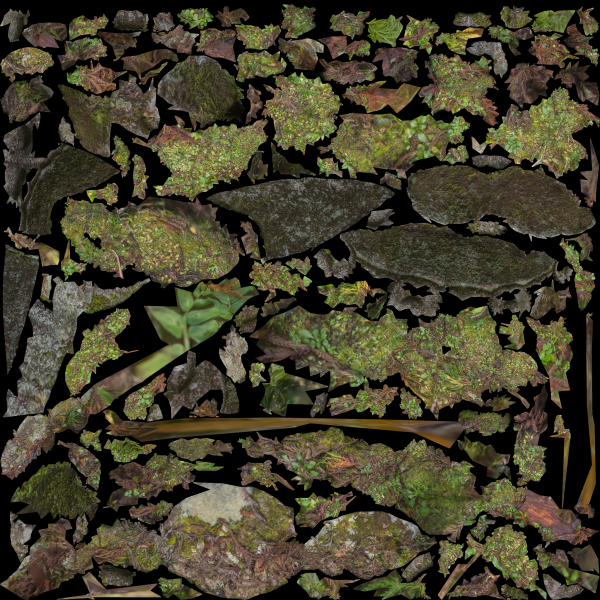

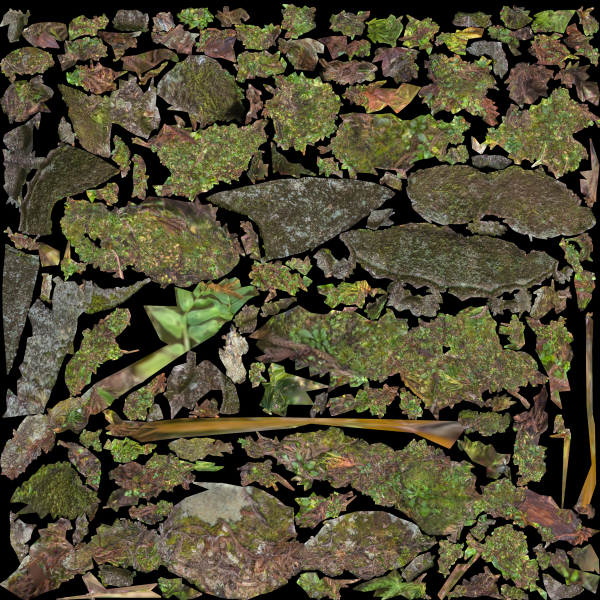

Before & after delighting

Varjo & Unity – Easy integration

Once the scene was imported, it was just a matter of dragging the VarjoUser prefab to the scene. Instantly, the scene was viewable with the Varjo headset, and we could start tweaking it to match our needs.

The Unity asset Enviro was used for the day-night cycle, and real-time global illumination was baked into the scene. The generated mesh UVs were used for the global illumination to avoid long preprocessing times. The settings were chosen so that the lightmapper would do minimal work on the UVs. This can be done by enabling UV optimization on the meshes and adjusting the related settings.