The human eye works like a narrow-beam scanner that the brain uses to collect information and build an understanding of the world around us. Its weakest link is peripheral vision: in case you haven’t noticed, we see extremely poorly out of the corners of our eyes. At the center of the field of view, however, the image our eyes see is crystal clear.

The resolution of Varjo’s headset is equivalent to 20/20 vision in the human eye. What does that mean?

Imagine standing 20 feet in front of a Snellen chart and seeing the small E letters as clearly as possible, both in VR and in the real world. There is no difference between VR and the real world. Period.

Another way to describe the acuity of human vision is to define how many pixels per degree (of field of view) the eye can resolve. According to Steve Jobs, 300 pixels per inch is enough for a person with normal vision when the display is viewed from 10 to 12 inches away. This is commonly translated to 57 pixels per degree.
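As a rough check of that conversion, the sketch below computes how many pixels fit within one degree of visual angle for a 300 ppi display viewed from 10, 11 and 12 inches. It assumes a flat display viewed head-on near the line of sight, and the function name is ours, for illustration only. At about 11 inches the result lands close to the quoted 57 pixels per degree; for comparison, 20/20 acuity is conventionally defined as resolving detail of about one arcminute, which corresponds to roughly 60 pixels per degree.

```python
import math

def pixels_per_degree(ppi: float, distance_in: float) -> float:
    """Pixels spanned by one degree of visual angle at a given viewing distance."""
    # Width (in inches) subtended by 1 degree, centered on the line of sight.
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree

for d in (10, 11, 12):
    print(f"{d} in: {pixels_per_degree(300, d):.1f} px/deg")
# 10 in: 52.4 px/deg
# 11 in: 57.6 px/deg
# 12 in: 62.8 px/deg
```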

Why resolution is key for virtual reality

In Varjo’s VR headsets, the resolution at the center of the display reaches 64 pixels per degree, about 20 times the resolution of current headsets on the market. The Bionic Display™ can portray even the smallest details, which is incredibly valuable for professional applications.

One of the key requirements for virtual reality is legibility: not just of text, but of instruments and labels. In everyday life, people rarely pay much attention to the text and symbols around them, but in VR, being able to read them is crucial. How can you design a realistic car dashboard if all the text and symbols appear as a pixelated mess? How can you teach pilots or astronauts if the text on the buttons cannot be read? How can you train nuclear power plant operators to handle critical situations that demand visual acuity?

Varjo makes all these scenarios possible. If text of a given size and contrast is readable in real life, it is also readable in VR. This single feature takes VR simulation to a whole new level.

Another key use case for human-eye resolution virtual reality is architecture and industrial design. Designers spend hours examining different surface materials and structures. In current VR architecture demos, you can grasp the space and its proportions, but the lack of detail can be frustrating.

With Varjo, you can clearly see the difference between knitted and printed fabric. You can explain the merits of premium materials over low-cost ones to your client, or compare how glossiness affects surface appearance.

How it all started

At the beginning of Varjo’s journey, we focused on developing a mixed reality device that could work with existing VR headsets and provide low-latency, high-resolution mixed reality based on video pass-through.

We soon discovered that cameras were not the weakest link in a video see-through system. The cameras could capture the real world at good quality, as long as we could get a low-latency video processing pipeline from one of the image signal processor (ISP) developers.

The bigger challenge was that none of the available headsets had the resolution and quality needed for a viable mixed reality device. Most headset makers focused on reducing price rather than improving visual quality.

The lack of quality led us to reconsider our initial approach and shift our focus to building our own headset. Admittedly, that was a bold move for a company of four people in 2016, but we were certain that we could provide something fundamentally better.

Towards human-eye resolution virtual reality: two displays per eye

Back in 2016, our founding team estimated what it would take for video pass-through to seriously challenge optical see-through XR. Our reasoning was that if we could reach a quarter of human-eye resolution, i.e. 30 pixels per degree (half the eye’s angular pixel density, and therefore about a quarter of the pixel count), it would be good enough for most mixed reality use cases. Back then, typical commercial headsets barely reached 10 pixels per degree, so the required jump in resolution was enormous.

At first, we studied whether we could rely on ongoing display development to reach a sufficient quality level. Unfortunately, there was no natural development curve in display technology that would have reached 30 pixels per degree without considerably reducing the field of view. That did not feel like the right compromise. There was also the performance challenge of driving such a high-resolution display and rendering content for it.
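To give a feel for the numbers, the sketch below estimates the pixel grid a single display would need to cover a field of view at a given angular density. It assumes a hypothetical 100° × 100° field of view per eye and pixels spread uniformly across it; real headset optics do not distribute pixels evenly, so treat the results as order-of-magnitude figures. It also shows one way to read the "quarter of human-eye resolution" figure: 30 pixels per degree needs a quarter of the pixels that 60 pixels per degree does.

```python
def required_pixels(fov_h_deg: float, fov_v_deg: float, ppd: float) -> tuple[int, int]:
    """Pixel grid for a uniform angular density (simplification: ignores lens mapping)."""
    return round(fov_h_deg * ppd), round(fov_v_deg * ppd)

FOV = (100, 100)  # hypothetical per-eye field of view, in degrees

for ppd in (10, 30, 60):
    w, h = required_pixels(*FOV, ppd)
    print(f"{ppd:>2} px/deg -> {w} x {h} = {w * h / 1e6:.1f} MP per eye")
# 10 px/deg -> 1000 x 1000 = 1.0 MP per eye
# 30 px/deg -> 3000 x 3000 = 9.0 MP per eye
# 60 px/deg -> 6000 x 6000 = 36.0 MP per eye
```

Under these assumptions, going from 10 to 30 pixels per degree multiplies the pixel count, and roughly the rendering load, by nine.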

I don’t recall which of the founders originally came up with the idea of using two displays per eye inside the headset, but once the idea was said out loud, it felt like the right thing to do.

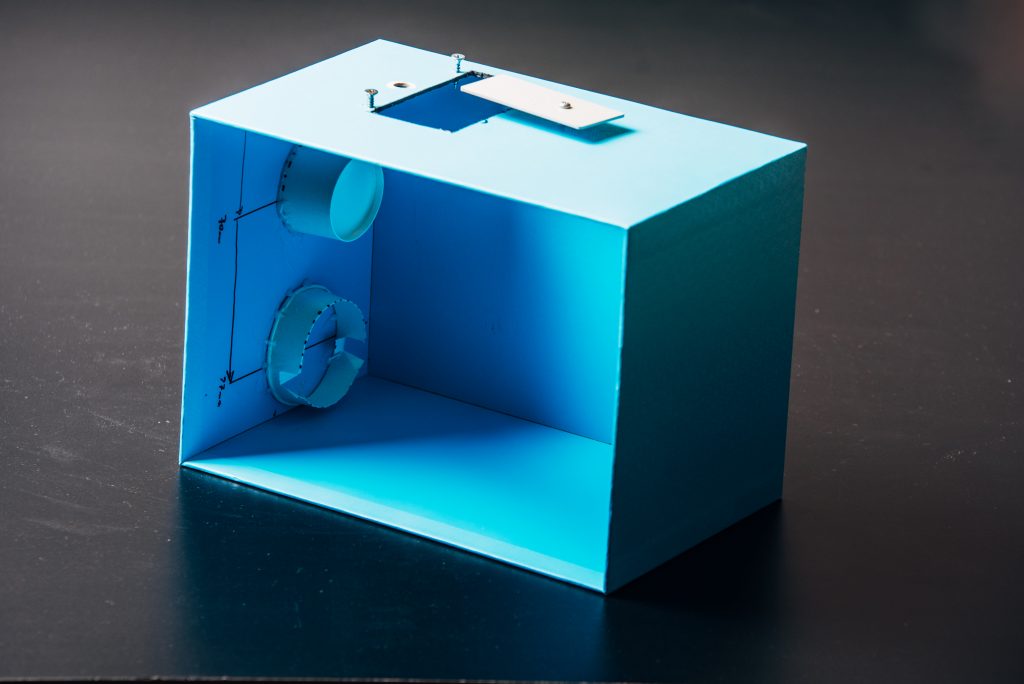

The first prototype was literally a cardboard box containing two pictures, an optical combiner, and two controllable light sources to illuminate the pictures. This crude prototype was pivotal, since it clearly proved that our approach was feasible and sound.

From prototyping to product

The trick with the Bionic Display™ is that it optically combines two displays. One display, covering the peripheral area, provides the wide field of view. The other, which we call the focus display, covers only a small area and therefore has a much higher pixel density. The images from the two displays are then combined using a semi-transparent mirror, i.e. a beam combiner. The end result is an image that has both a wide field of view and high detail.
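The sketch below is a purely software analogy of the idea, not how the Bionic Display™ works internally. It resamples one horizontal scanline from a wide, low-density context display and a narrow, high-density focus display onto a common angular grid, taking the focus image wherever it covers the view and the context image elsewhere. The function name, the toy pixel counts and the "replace the center" rule are assumptions; a real beam combiner superimposes light from both displays optically.

```python
def composite_scanline(context_px, context_fov, focus_px, focus_fov, out_ppd):
    """Merge two displays centered on the line of sight into one angular scanline.

    context_px / focus_px: pixel intensities spread evenly across each display's
    horizontal field of view (context_fov / focus_fov, in degrees).
    out_ppd: angular density of the output grid, in pixels per degree.
    """
    out = []
    for i in range(round(context_fov * out_ppd)):
        # Angle of this output sample's center, in degrees from the view center.
        angle = (i + 0.5) / out_ppd - context_fov / 2
        if abs(angle) < focus_fov / 2:
            # Inside the focus area: sample the dense focus display.
            idx = int((angle + focus_fov / 2) / focus_fov * len(focus_px))
            out.append(focus_px[idx])
        else:
            # Periphery: sample the wide but coarse context display.
            idx = int((angle + context_fov / 2) / context_fov * len(context_px))
            out.append(context_px[idx])
    return out

# Toy example: 9 context pixels over 90 degrees (0.1 px/deg) and
# 30 focus pixels over the central 30 degrees (1 px/deg).
ctx = list(range(9))
foc = [100 + k for k in range(30)]
line = composite_scanline(ctx, 90, foc, 30, out_ppd=1)
print(len(set(line[30:60])), "distinct values in the center,",
      len(set(line[:30])), "in the left periphery")
# 30 distinct values in the center, 3 in the left periphery
```

The same span of view gets ten times more distinct samples in the center, which is the point of dedicating a small, dense display to the area the eye sees sharply.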

A lot of prototyping was needed to turn our pivotal idea into reality. Our first tabletop prototypes demonstrated that the principle of merging two images and driving four displays concurrently works.

A key breakthrough came when we got new, larger micro-OLEDs and put a magnification lens on top of them. At that point, we had a prototype with a sufficiently large high-density area and a resolution beyond that of the human eye. These bigger OLEDs reached such fidelity that you didn’t have to be the proud parent of this invention to notice that something magical was going on. The image quality was miles ahead of the competition.

If the two displays were simply combined optically, distortions from the optics, assembly tolerances, color balance, and practically every other aspect of perceived image quality would be off. That is why software innovation is just as important as optics in creating a high-resolution image for the Bionic Display™.

With the huge processing capacity of modern GPUs, we can compensate for these errors in software, and thanks to calibration on our automated production line, we achieve high fidelity in every individual unit we manufacture.
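To illustrate what per-unit software compensation can look like in general, here is a minimal sketch under stated assumptions. It does not describe Varjo’s actual calibration pipeline. The `UnitCalibration` record is hypothetical: it holds polynomial radial distortion coefficients (a common lens model, assumed here to be fitted directly as a pre-distortion so no inversion is needed), per-channel color gains, and a measured focus-display offset in normalized coordinates. In a headset this per-pixel math would run in a GPU shader; plain Python is used only for readability.

```python
from dataclasses import dataclass

@dataclass
class UnitCalibration:
    """Hypothetical per-headset calibration record measured on a production line."""
    k1: float                              # radial pre-distortion coefficients
    k2: float
    rgb_gain: tuple[float, float, float]   # per-channel color balance correction
    focus_offset: tuple[float, float]      # where the focus image lands vs. ideal

def prewarp(x: float, y: float, cal: UnitCalibration) -> tuple[float, float]:
    """Pre-distort a normalized image coordinate so the lens maps it back to (x, y)."""
    r2 = x * x + y * y
    scale = 1 + cal.k1 * r2 + cal.k2 * r2 * r2
    return x * scale, y * scale

def focus_draw_position(x: float, y: float, cal: UnitCalibration) -> tuple[float, float]:
    """Undo the measured assembly offset, then apply the lens pre-distortion."""
    dx, dy = cal.focus_offset
    return prewarp(x - dx, y - dy, cal)

def correct_color(rgb, cal: UnitCalibration) -> tuple[float, ...]:
    """Scale each channel by its calibrated gain, clamped to the displayable range."""
    return tuple(min(1.0, c * g) for c, g in zip(rgb, cal.rgb_gain))

cal = UnitCalibration(k1=0.1, k2=0.0, rgb_gain=(1.0, 0.9, 1.2), focus_offset=(0.01, 0.0))
print(prewarp(1.0, 0.0, cal))
print(correct_color((0.5, 0.5, 0.9), cal))
```

Storing these values per unit is what lets identical rendering code produce consistent images despite small differences between individual headsets.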