Real-Life XR Use Cases and the Technologies Behind Them

Descriptions of mixed reality technology can sometimes sound as if they came from the pen of a science fiction writer, but that impression is misleading. While there are certainly futuristic and impressive use cases still to be discovered, mixed reality is no longer science fiction. XR is already here, and companies around the world are using it to design, build, and train better than ever.

In this article, we go through some of the core technologies used by Varjo and how companies and organizations are already using them on a daily basis.

Mixed Reality Masking

There are different methods for creating a mixed reality experience with Varjo. The first is called mixed reality masking. When developing for Varjo headsets, you can use any virtual object in your scene (a plane, a cube, or a complex mesh) as a “window” into the real world. This is especially useful when you want to bring real-world elements, such as instruments or controller devices, into the virtual environment through one or more “windows”.

A practical example of this is flight simulation, such as a cockpit simulator where you want to display a virtual flight scenario outside the cockpit while still showing the user the real cockpit and its physical controls.
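Conceptually, a mask acts as a per-pixel selector between the pass-through camera image and the rendered virtual scene. The sketch below is a minimal illustration, not Varjo's API: it assumes frames are small 2D lists of RGB tuples and that a hypothetical boolean `mask` is True wherever the "window" object covers the pixel.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]
Mask = List[List[bool]]


def composite_with_mask(camera: Frame, virtual: Frame, mask: Mask) -> Frame:
    """Show the camera feed where the mask object covers a pixel, else the virtual scene."""
    return [
        [cam if inside else virt for cam, virt, inside in zip(cam_row, virt_row, mask_row)]
        for cam_row, virt_row, mask_row in zip(camera, virtual, mask)
    ]


# 2x2 toy frame: the left column is a "window" onto the real cockpit,
# the right column shows the virtual sky.
camera = [[(10, 10, 10), (20, 20, 20)], [(30, 30, 30), (40, 40, 40)]]
virtual = [[(0, 0, 255), (0, 0, 255)], [(0, 0, 255), (0, 0, 255)]]
mask = [[True, False], [True, False]]
result = composite_with_mask(camera, virtual, mask)
print(result)
```

In a real headset, the mask comes from rasterizing the window geometry each frame, so the window stays locked to the physical cockpit as the user moves.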

Chroma Keying

A second method for creating a mixed reality experience with Varjo is chroma keying. Chroma keying, also known as “green screening” or “blue screening,” is a technique used to superimpose one image or video over another by removing a specific color, usually green or blue, from the background of the top layer. This allows the background to be replaced with a different image or video, creating the illusion that the subject in the top layer is in a different location or environment.

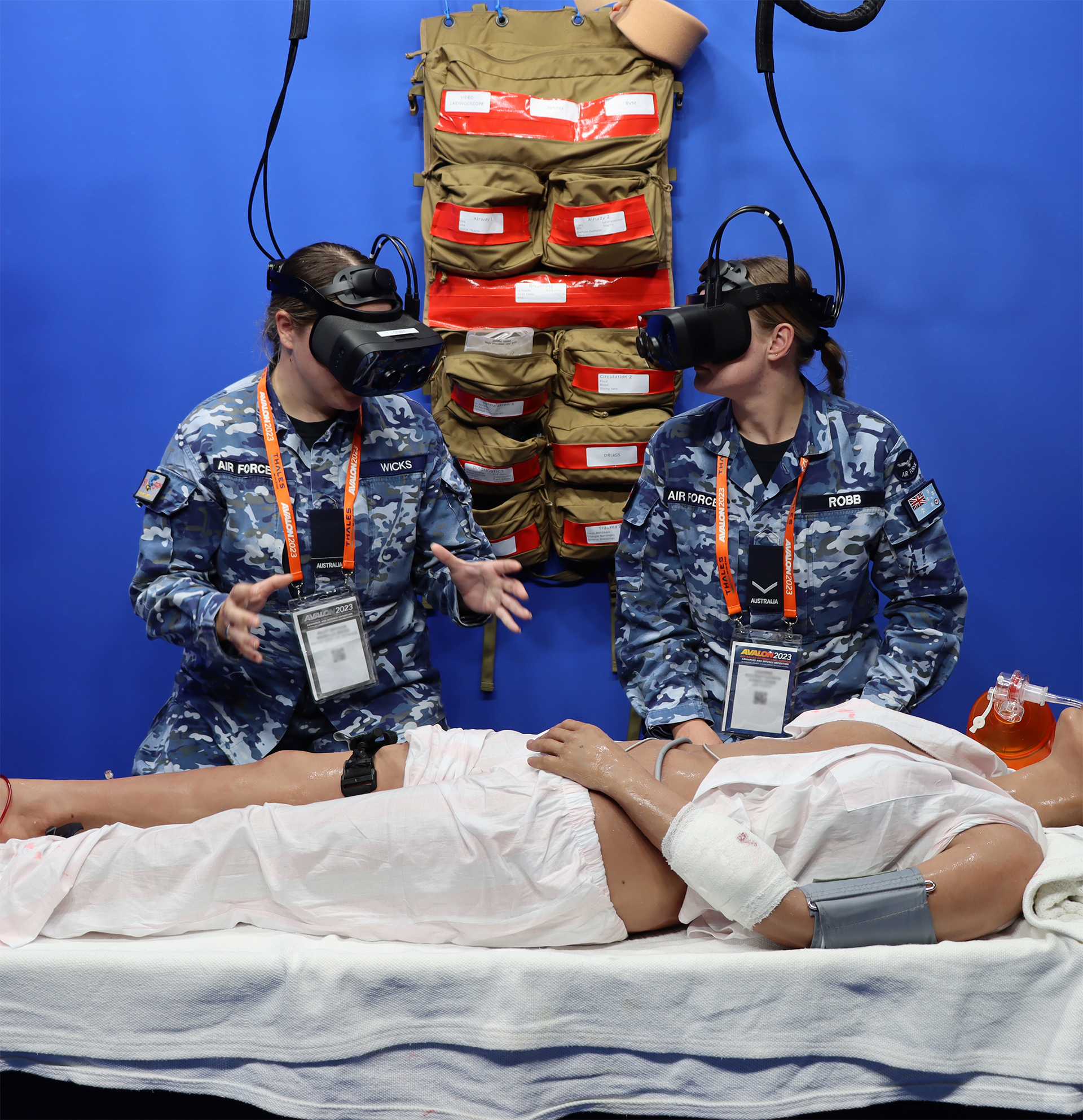

Chroma keying enables very high-precision occlusion of virtual objects by real objects. It is the best fit for use cases where the user needs to see real objects accurately. In practice, this means use cases where a user needs to see a handheld tool, other people, or other physical objects that should appear overlaid on top of the virtual scenario.
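In the headset, the logic is inverted compared with a film green screen: key-colored pixels in the camera image are replaced with virtual content, and everything else (hands, tools, people) stays real. The minimal sketch below uses a simple Euclidean RGB distance test. The key color and tolerance are assumptions, and production keyers typically work in other color spaces with soft edges; this is not Varjo's implementation.

```python
import math
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]

KEY_GREEN: Pixel = (0, 177, 64)  # assumed key color; calibrate to the actual screen


def is_key_color(pixel: Pixel, key: Pixel = KEY_GREEN, tolerance: float = 60.0) -> bool:
    """True if the pixel lies within `tolerance` (Euclidean RGB distance) of the key color."""
    return math.dist(pixel, key) <= tolerance


def chroma_key(camera: Frame, virtual: Frame, key: Pixel = KEY_GREEN, tolerance: float = 60.0) -> Frame:
    """Swap key-colored camera pixels for the virtual scene; keep all other pixels real."""
    return [
        [virt if is_key_color(cam, key, tolerance) else cam for cam, virt in zip(cam_row, virt_row)]
        for cam_row, virt_row in zip(camera, virtual)
    ]


# One row: a green-screen pixel next to a pixel of the trainee's hand.
camera = [[(5, 180, 60), (200, 150, 120)]]
virtual = [[(30, 30, 90), (30, 30, 90)]]
print(chroma_key(camera, virtual))
```

Because the decision is made per pixel on the live camera image, any real object in front of the screen occludes the virtual scene automatically, with no depth sensing or application code involved.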

Chroma keying can turn any VR application that runs on a Varjo headset into a mixed reality application without writing a single line of code or having access to the application’s source code. Practical applications of chroma keying with Varjo include different kinds of racing setups, flight and driving simulation, mixed reality training rooms, and much more.

Mixed Reality Post-Process Shaders

Because Varjo headsets use video pass-through for mixed reality, the video feed can also be adjusted in real time with different kinds of “filters” without adding noticeable latency. This enables use cases such as simulating night vision or different kinds of medical conditions that affect a person’s sight. These adjustments can be extremely useful for purposes such as training and medical research.

Night vision simulation can be very useful for training military pilots for night operations. As with other mixed reality flight simulation use cases, it lets pilots practice a mission before stepping into an actual plane and get a more realistic feel for how flying at night differs from flying in daylight.
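A night vision filter of this kind is a per-pixel operation on the camera image: estimate brightness, amplify it, and display it in monochrome green. The sketch below is a hypothetical illustration in plain Python. The Rec. 709 luma weights are standard, but the gain and the green tint are assumptions, and a real shader would run on the GPU and often add noise and blooming.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def night_vision(pixel: Pixel, gain: float = 4.0) -> Pixel:
    """Map one RGB camera pixel to an amplified, green-tinted night vision pixel."""
    r, g, b = pixel
    # Rec. 709 luma weights approximate perceived brightness.
    luminance = 0.2126 * r + 0.7152 * g + 0.0722 * b
    # Amplify the faint signal, as an image intensifier would, and clip to 8 bits.
    amplified = min(255.0, luminance * gain)
    # Output a monochrome green image with a slight blue component.
    return (0, round(amplified), round(amplified * 0.2))


def apply_night_vision(frame: List[List[Pixel]], gain: float = 4.0) -> List[List[Pixel]]:
    """Apply the filter to every pixel of a frame."""
    return [[night_vision(p, gain) for p in row] for row in frame]


dark_cockpit = [[(10, 10, 10), (255, 255, 255)]]
print(apply_night_vision(dark_cockpit))
```

Raising `gain` brightens dim scenes further at the cost of saturating bright light sources, which is one of the behaviors pilots need to get used to.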

An example from the medical field comes from Roche and Lucid Reality Labs. The companies used a Varjo headset and its eye tracking and shader capabilities to simulate what living with macular disease, a degenerative eye disorder, is like. As the user moves their gaze, the video pass-through accurately simulates dark spots and tears in a person’s visual field, demonstrating how the disease hinders everyday life. This makes it easier to relate to patients’ problems and helps raise awareness of the disease.
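The key idea behind a gaze-contingent simulation is that the affected region is re-centered on the current gaze point every frame, so it moves with the eyes just as a real blind spot would. The sketch below is a minimal, hypothetical version and not the Roche and Lucid Reality Labs implementation. It assumes a single circular dark spot with a linear soft edge, with radius and feather given in pixels.

```python
import math
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def scotoma_attenuation(px: float, py: float, gaze_x: float, gaze_y: float,
                        radius: float, feather: float) -> float:
    """Brightness multiplier: 0 inside the dark spot, 1 outside it, linear ramp between."""
    d = math.hypot(px - gaze_x, py - gaze_y)
    if d <= radius:
        return 0.0
    if d >= radius + feather:
        return 1.0
    return (d - radius) / feather


def apply_scotoma(frame: List[List[Pixel]], gaze: Tuple[float, float],
                  radius: float = 2.0, feather: float = 2.0) -> List[List[Pixel]]:
    """Darken the region around the gaze point; call again with each new gaze sample."""
    gx, gy = gaze
    out = []
    for y, row in enumerate(frame):
        new_row = []
        for x, (r, g, b) in enumerate(row):
            k = scotoma_attenuation(x, y, gx, gy, radius, feather)
            new_row.append((round(r * k), round(g * k), round(b * k)))
        out.append(new_row)
    return out


row = [[(100, 100, 100)] * 6]
print(apply_scotoma(row, gaze=(0, 0)))
```

Feeding the latest eye-tracking sample into `gaze` each frame is what keeps the spot in the center of vision, wherever the user looks.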

Real-Time Reflections in Mixed Reality

Varjo headsets can use the video pass-through camera feed to create lighting conditions and reflections that match your current real-world surroundings. This is extremely useful when blending virtual content with the real world, as it makes the scene appear more natural and realistic.

Real-time reflections are useful in any scenario where the goal is to deepen immersion and realism as much as possible. One practical application is design, such as automotive or interior design, where digital objects should match their real-world equivalents as accurately as possible. For example, real-time reflections can give a designer a much better sense of how reflective materials would behave in the real world.
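How Varjo builds environment data from the camera feed is not covered here. Assuming an environment map has already been captured from the surroundings and stored in the common equirectangular format, a reflective material looks up the color along the reflected view direction. The sketch below shows that generic environment-mapping step. The axis convention (y up, -z forward) is an assumption, and this is not Varjo's rendering pipeline.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def reflect(d: Vec3, n: Vec3) -> Vec3:
    """Mirror view direction d about unit surface normal n: r = d - 2(d.n)n."""
    dot = sum(a * b for a, b in zip(d, n))
    return tuple(a - 2.0 * dot * b for a, b in zip(d, n))


def equirect_uv(r: Vec3) -> Tuple[float, float]:
    """Map a unit direction to (u, v) in [0, 1] on an equirectangular environment map."""
    x, y, z = r
    u = 0.5 + math.atan2(x, -z) / (2.0 * math.pi)       # longitude; forward (-z) maps to u = 0.5
    v = 0.5 - math.asin(max(-1.0, min(1.0, y))) / math.pi  # latitude; straight up maps to v = 0
    return u, v


# Looking straight down at a glossy horizontal surface (normal pointing up):
r = reflect((0.0, -1.0, 0.0), (0.0, 1.0, 0.0))
print(r, equirect_uv(r))
```

The reflected ray points straight up, so the surface samples the top row of the map, meaning the real ceiling above the user. That is why a virtual car body can show the actual room lights moving across it.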

Hand Tracking

Hand tracking lets you operate in and interact with virtual and mixed reality environments without separate controllers. You use your hands to control the virtual environment just as you would interact with the physical world: you can push buttons, move objects, operate on a virtual patient while holding virtual surgical tools, and much more.
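A hand tracker typically reports joint positions, and the application turns them into actions such as grabbing or pressing. The sketch below detects a pinch from thumb and index fingertip positions. The class name, thresholds, and the assumption that positions are in meters are all hypothetical, and this is not Varjo's hand-tracking API. Using two thresholds (hysteresis) keeps the gesture from flickering when the fingertips hover near a single cut-off.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


class PinchDetector:
    """Detect a pinch gesture from thumb and index fingertip positions (meters)."""

    def __init__(self, press_at: float = 0.02, release_at: float = 0.035):
        self.press_at = press_at      # fingertips closer than this start a pinch
        self.release_at = release_at  # fingertips farther than this end it
        self.pinching = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> bool:
        """Feed one tracking frame; returns whether the hand is currently pinching."""
        gap = math.dist(thumb_tip, index_tip)
        if self.pinching and gap > self.release_at:
            self.pinching = False
        elif not self.pinching and gap < self.press_at:
            self.pinching = True
        return self.pinching


detector = PinchDetector()
for gap in (0.05, 0.01, 0.03, 0.04):
    print(gap, detector.update((0.0, 0.0, 0.0), (gap, 0.0, 0.0)))
```

A 3 cm gap keeps an existing pinch held but would not start a new one, so a grabbed virtual tool does not slip out of the hand because of tracking jitter.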

Mixed reality applications using hand tracking can easily combine physical and virtual objects. For example, a pilot trainee can use the physical controls of a cockpit simulator while also interacting with digital instruments that do not exist in the physical cockpit.

The biggest benefit of hand tracking is that it is highly intuitive. Anyone can engage with virtual or mixed reality content immediately, even without any prior XR or VR experience. It also makes the application much more immersive.

Eye Tracking

Eye tracking makes it possible to record and study eye movements in virtual and mixed reality applications. Varjo headsets feature the fastest and most accurate integrated eye tracking, running at 200 Hz and capable of capturing even minute eye-movement details, such as saccade velocities and fixation durations, with research-grade accuracy and precision.
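A common way to derive saccade velocities and fixation durations from raw gaze samples is velocity-threshold identification (I-VT): compute angular velocity between consecutive samples and treat slow stretches as fixations. The sketch below assumes gaze angles in degrees sampled at 200 Hz and a 30°/s threshold, which is a frequently used value but study-dependent. It is a generic algorithm, not the processing done by Varjo's software.

```python
from typing import List, Tuple

SAMPLE_RATE_HZ = 200.0  # sampling rate from the text above
DT = 1.0 / SAMPLE_RATE_HZ


def classify_ivt(angles_deg: List[Tuple[float, float]],
                 threshold_deg_s: float = 30.0) -> Tuple[List[float], List[float]]:
    """Velocity-threshold (I-VT) classification of gaze samples.

    angles_deg holds (horizontal, vertical) gaze angles in degrees.
    Returns per-interval angular velocities (deg/s) and fixation durations (s).
    """
    velocities = []
    for (x0, y0), (x1, y1) in zip(angles_deg, angles_deg[1:]):
        # Small-angle approximation: treat the angle pair as planar coordinates.
        velocities.append(((x1 - x0) ** 2 + (y1 - y0) ** 2) ** 0.5 / DT)

    fixations = []
    run = 0  # consecutive slow intervals
    for v in velocities:
        if v < threshold_deg_s:
            run += 1
        elif run:
            fixations.append(run * DT)
            run = 0
    if run:
        fixations.append(run * DT)
    return velocities, fixations


# Steady gaze, a 5-degree saccade within one 5 ms interval, then steady gaze again.
samples = [(0.0, 0.0)] * 5 + [(5.0, 0.0)] * 4
velocities, fixations = classify_ivt(samples)
print(max(velocities), fixations)
```

The higher the sampling rate, the shorter `DT` is, which is why 200 Hz tracking can resolve fast saccades that would be smeared across a single interval at lower rates.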

Eye tracking is used widely in different kinds of research and training applications because it enables the trainer or researcher to see precisely where the headset user is looking.

For example, MVRsimulation uses it in pilot simulator training to show the trainer a highly accurate visual representation of where the trainee is looking. With this kind of information, pilot trainees can learn the correct behaviors much faster, without any guesswork involved.
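One simple way to summarize where a trainee is looking is dwell time per area of interest, such as individual cockpit instruments. The sketch below is a hypothetical illustration, not MVRsimulation's method: instrument names and rectangles are made up, gaze points are assumed to be in normalized view coordinates, and areas are assumed not to overlap.

```python
from typing import Dict, List, Tuple

Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), normalized coords


def dwell_times(gaze_points: List[Tuple[float, float]], aois: Dict[str, Rect],
                sample_rate_hz: float = 200.0) -> Dict[str, float]:
    """Total seconds of gaze spent inside each area of interest."""
    dt = 1.0 / sample_rate_hz
    totals = {name: 0.0 for name in aois}
    for x, y in gaze_points:
        for name, (x0, y0, x1, y1) in aois.items():
            if x0 <= x <= x1 and y0 <= y <= y1:
                totals[name] += dt
                break  # areas assumed non-overlapping: count each sample once
    return totals


instruments = {"altimeter": (0.0, 0.0, 0.2, 0.2), "airspeed": (0.5, 0.5, 0.7, 0.7)}
gaze = [(0.1, 0.1)] * 40 + [(0.6, 0.6)] * 20 + [(0.9, 0.9)] * 10  # last 10 samples: outside
print(dwell_times(gaze, instruments))
```

A trainer can compare these totals against the scan pattern expected for a maneuver, for example spotting that a trainee rarely checks airspeed during approach.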

Foveated Rendering

The goal of foveated rendering is to give users the best visual quality possible in areas they are actually gazing at while using the least amount of computational resources. It is a rendering technique that uses an eye tracker integrated into a headset to reduce the image quality of the content being rendered in the user’s peripheral vision.

Lowering the image quality in the user’s peripheral vision improves system performance, because approximately 30-50% fewer pixels need to be rendered. This trade-off makes sense because a person sees at the highest resolution only at the center of the eye’s field of view. Rendering full detail in the periphery is essentially wasted, since the eye cannot resolve it there.
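The exact savings depend on how large the full-resolution region is and how much the periphery is reduced. A simple two-zone model shows the arithmetic. The parameters below are hypothetical, and real implementations may use more zones or variable-rate shading rather than a single peripheral scale.

```python
def rendered_pixel_fraction(foveal_area_fraction: float, peripheral_scale: float) -> float:
    """Fraction of full-resolution pixels rendered with two-zone foveated rendering.

    foveal_area_fraction: share of the image rendered at full resolution.
    peripheral_scale: per-axis resolution scale in the periphery, so the
    peripheral pixel count scales with its square.
    """
    periphery = 1.0 - foveal_area_fraction
    return foveal_area_fraction + periphery * peripheral_scale ** 2


# 20% of the image at full resolution, periphery at 75% resolution per axis:
# 0.2 + 0.8 * 0.75**2 = 0.65 of the original pixel count.
fraction = rendered_pixel_fraction(0.2, 0.75)
print(f"{1 - fraction:.0%} fewer pixels")  # prints "35% fewer pixels"
```

Because pixel count scales with the square of the per-axis scale, even a modest reduction in peripheral resolution yields savings in the range quoted above.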

Foveated rendering lets applications deliver the best possible visual quality with less computing power, which reduces hardware requirements. All Varjo VR and XR headsets have foveated rendering capabilities. However, the feature works only if the application being used also supports it.

Multiapp

Did you know that you can already run multiple Varjo applications simultaneously? The image the user sees can be constructed using more than one application. These applications can be made with different engines or image generators.

For example, you can use a specific image generator (IG) to render terrain while a Unity or Unreal app renders the virtual parts of the cockpit, adds hand-tracking capability, or implements mission selection.
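Conceptually, combining several applications into one image means stacking each application's layer in priority order and blending them with the standard "over" operator. The sketch below works on a single pixel. The `Layer` type, its `priority` field, and straight (non-premultiplied) alpha are assumptions for illustration, not Varjo's layer API.

```python
from dataclasses import dataclass
from typing import List, Tuple

RGBA = Tuple[float, float, float, float]  # straight alpha, components in 0..1


@dataclass
class Layer:
    source: str    # which application produced the layer (illustrative label)
    priority: int  # higher values are drawn on top
    pixel: RGBA


def over(top: RGBA, bottom: RGBA) -> RGBA:
    """Porter-Duff 'over' operator for straight-alpha colors."""
    ta, ba = top[3], bottom[3]
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)
    rgb = tuple((top[i] * ta + bottom[i] * ba * (1.0 - ta)) / out_a for i in range(3))
    return (*rgb, out_a)


def composite(layers: List[Layer]) -> RGBA:
    """Blend layers from lowest to highest priority, regardless of list order."""
    result: RGBA = (0.0, 0.0, 0.0, 0.0)
    for layer in sorted(layers, key=lambda layer: layer.priority):
        result = over(layer.pixel, result)
    return result


terrain = Layer("image generator", 0, (0.0, 0.5, 0.0, 1.0))  # opaque terrain
cockpit = Layer("Unity app", 1, (1.0, 0.0, 0.0, 0.5))        # semi-transparent overlay
print(composite([cockpit, terrain]))
```

Sorting by priority rather than by submission order lets each application run and submit frames independently while the final image stays consistent.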

You can also use the multiapp feature for a second application that renders nothing on the headset. For example, the second app can access eye-tracking data while the first application renders the visuals.

Interested in learning more about what’s possible with Varjo mixed reality?