Dynamic Foveated Variable Shader Rate with the OpenXR Toolkit and Varjo Aero (a.k.a. Foveated Rendering)

Overview

Human eyes have a unique design with two kinds of photoreceptors: rods and cones. Cones are very accurate and detect fine details. Rods are much more sensitive to light than cones, but blurrier. Cones and rods are distributed very non-uniformly. Cones are concentrated within a few degrees of the field of view around the gaze. There are no rods in the center of the field of view (FOV), but they cover most of the rest of the eye’s field of view. There is even a blind spot where the optic nerve is attached, an entire area of the eye that has no photoreceptors at all. https://foundationsofvision.stanford.edu/chapter-3-the-photoreceptor-mosaic/

The human brain performs some magic given all these constraints and forms a clear picture. For instance, we do not notice the blind spot. We can capture high resolution only at the center of the eye’s field of view, and we can barely detect colors in the periphery. So for head-mounted displays (HMDs), if we know exactly where the eye is looking, we could omit rendering completely in the blind spot area, lower the image resolution in the periphery, and probably render in black and white in the outer periphery, and none of this would be noticeable to the person in the headset. This would potentially save a lot of rendering resources.

There are many ways to take advantage of this phenomenon. Most of them are based on using less resolution in the periphery than in the center, possibly combined with different levels of detail, texture resolutions, or antialiasing techniques. But it is not that simple, as image formation in the eye and brain is a complex machinery that is not based on pixels. And even if we had figured out how best to take advantage of this, there is the problem of latency. The eyes can move very fast. By the time the rendering engine has calculated the image and it is displayed, the eyes may be looking somewhere else, and the performance optimization trickery will be noticed.

OpenXR – VARJO_quad_views and VARJO_foveated_rendering

OpenXR is a royalty-free, cross-platform standard designed by numerous collaborating companies, including HTC, Microsoft, Meta, Valve, Varjo, NVIDIA, and AMD, to simplify life for application developers. The standard has been available on nearly all VR/AR devices for some time now, and naturally more and more applications are using this interface to HMDs. Microsoft Flight Simulator, for example, uses OpenXR. https://www.khronos.org/registry/OpenXR/specs/1.0/html/xrspec.html

OpenXR is composed of core features, multi-vendor extensions, and vendor-specific extensions. One of those vendor-specific extensions is VARJO_quad_views, available on Varjo HMDs, as the name of the extension indicates. By default, an OpenXR application generates two images, one for each eye. This is known as stereo views. But one unique capability of Varjo HMDs (except the Aero) is that they have two displays per eye, allowing human-eye resolution in the center area of the screen. The purpose of VARJO_quad_views is to enable the application to generate one view covering the entire field of view at the resolution of the context screen, and one inner view matching the resolution and field of view of the focus screen (padded to account for head movement and latency).

The VARJO_foveated_rendering extension augments the VARJO_quad_views extension by adding eye tracking. The idea is that, for every rendered frame, the application is given a different position for the inner view based on where the eyes are looking. And since we know where the eyes are looking, the size of the inner view can be much smaller than with the default quad views. The resolution of the outer view can also be reduced: there is no need to calculate as many pixels as the context screen can display because, even though the context screen is not as high resolution as the focus screen, it is still higher resolution than the eye can detect in its periphery.

Effectively, the foveated rendering extension decouples the required rendering resolution, which is driven by eye tracking and perceived resolution, from the resolution of the screens. Therefore, the same feature can also be applied on the Aero, as the outer view resolution required with eye tracking is lower than the resolution of the Aero display.

This is great, but unfortunately it is very demanding on the application side. Applications not only have to render two images to be compatible with VR, but must now render four images to take advantage of quad views foveated rendering. This is often not a concern for enterprise applications that are designed to fit Varjo perfectly, but it is a lot to ask of an application that has to be compatible with many HMDs and whose developers may not have the luxury of updating the rendering engine to generate additional views. Specifically, generating multiple views is costly in terms of triangle processing: a naïve implementation would multiply the number of rendered triangles, which could cost more than the performance gained in rendering. But there are specific GPU optimizations, such as simultaneous multi-projection, that should be used to gain the full benefits.

Variable Rate Shading (VRS)

NVIDIA added dedicated hardware in its Turing generation GPUs (2080s) that can control the rate at which shaders are run. Usually, each displayed pixel runs its own shader to calculate its final color. But VRS can set the GPU to run a shader only once for a group of pixels. The application can provide a matrix that specifies a shading rate for each 16×16 pixel region. https://developer.nvidia.com/vrworks/graphics/variablerateshading

Unlike the quad views extension, this method does not affect the rendering resolution. The same number of pixels still needs to be calculated and stored, and the edges of the polygons are still rendered at high resolution. But the GPU can still save a lot of time by running the shader once for a group of pixels, effectively changing the resolution at which the color information is calculated. The performance benefits of VRS may not be as good as with a deep integration of quad views, but the huge benefit is that, apart from providing a shading rate matrix, the application does not have to be modified to take advantage of it.
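To make the bookkeeping above concrete, here is a minimal sketch of how many 16×16 regions cover a render target, and how the number of shader invocations shrinks when one invocation covers a group of pixels. The 2880×2720 size and the function names are illustrative assumptions, not values taken from any SDK, and the invocation count is an idealized upper bound on the savings (edges and geometry still cost work).

```python
import math

TILE = 16  # region size in pixels, as described in the text above


def tile_grid(width, height, tile=TILE):
    """Return (columns, rows) of shading-rate regions covering the target."""
    return math.ceil(width / tile), math.ceil(height / tile)


def shader_invocations(width, height, rate_x, rate_y):
    """Idealized invocation count when one shader run covers rate_x x rate_y pixels."""
    return math.ceil(width / rate_x) * math.ceil(height / rate_y)


if __name__ == "__main__":
    width, height = 2880, 2720  # illustrative render target size (assumption)
    cols, rows = tile_grid(width, height)
    full = shader_invocations(width, height, 1, 1)
    coarse = shader_invocations(width, height, 2, 2)
    print(f"{cols} x {rows} regions")
    print(f"2x2 shading runs {coarse / full:.0%} of the full-rate invocations")
```

Note that the pixel count stored in the render target is the same in both cases; only the number of shader runs changes.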

OpenXR Toolkit as an OpenXR API layer

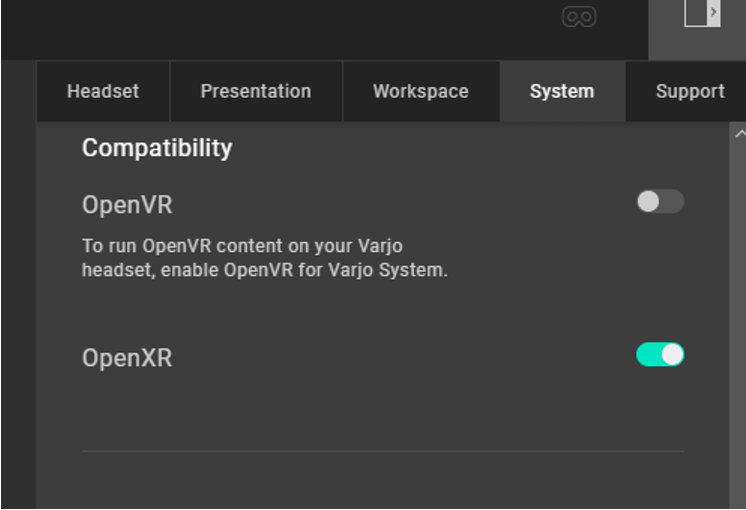

One of the major advantages of OpenXR is that it lets the end-user decide which OpenXR runtime to use. This is quite unique to OpenXR: the application writer only has to make sure that the application works with OpenXR, and the same executable can then load and run whichever OpenXR runtime the end-user has set up. This allows applications to work on HMDs that did not even exist when the application was developed, and it allows the end-user to decide what hardware to use without having to get a new executable. There is usually one runtime per hardware vendor, but there are also runtimes that can support more than one HMD by way of plugins within the runtime. This is the case for Varjo, where the end-user can select the Varjo OpenXR runtime (recommended) by enabling it in Varjo Base. But it is also possible to run an OpenXR application with the SteamVR OpenXR runtime, which then connects through the OpenVR plugin to the Varjo compositor. This shows how open the OpenXR standard is. It is also something to pay attention to: SteamVR OpenXR does not implement the Varjo extensions, so it is sometimes important to make sure the proper runtime is in use by checking the setting in Varjo Base.

Note that this can also be done by setting a key in the Windows registry: Computer\HKEY_LOCAL_MACHINE\SOFTWARE\Khronos\OpenXR\1\ActiveRuntime points to a JSON file that contains the location of the OpenXR runtime (e.g. C:\Program Files\Varjo\varjo-openxr\VarjoOpenXR.json).
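As a rough illustration of the lookup described above, the sketch below reads the ActiveRuntime value and extracts the runtime library location from a manifest. The manifest text and the DLL name in it are made up for the example; a real manifest is written by the runtime's installer and may contain more fields. The registry function only works on Windows, so it is defined but not called here.

```python
import json

# Hypothetical manifest contents, for illustration only.
SAMPLE_MANIFEST = r'''
{
    "file_format_version": "1.0.0",
    "runtime": {
        "library_path": ".\\VarjoOpenXR.dll"
    }
}
'''


def active_runtime_manifest_path():
    """Read the ActiveRuntime registry value (Windows only)."""
    import winreg  # imported lazily so the rest of the sketch runs anywhere

    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE,
                        r"SOFTWARE\Khronos\OpenXR\1") as key:
        value, _value_type = winreg.QueryValueEx(key, "ActiveRuntime")
    return value


def runtime_library_path(manifest_text):
    """Return the runtime library location declared in a manifest."""
    manifest = json.loads(manifest_text)
    return manifest["runtime"]["library_path"]


if __name__ == "__main__":
    print(runtime_library_path(SAMPLE_MANIFEST))
```

Printing the result of `active_runtime_manifest_path()` on a Windows machine is a quick way to check which runtime an application will load.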

Another standard built-in mechanism of the OpenXR loader is the capability to insert one or several API layers in front of the runtime. This effectively allows a third party to intercept or augment the interface between the application and the OpenXR runtime. One practical application is to provide a debug layer that analyzes all the calls and helps debug issues. It is also used by vendors to augment an existing runtime with additional extensions, such as hand tracking. It is very convenient, as it enables an end-user to add hardware peripherals and the corresponding software interface. This is also done by editing the Windows registry.
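The layering idea can be pictured as a chain of functions, where each layer receives a call, optionally changes it, and forwards it to the next link. The sketch below is only a conceptual model of that chain: it does not use the real OpenXR loader interface, and the frame dictionary, layer names, and "sharpen" flag are invented for illustration.

```python
def runtime_end_frame(frame):
    """Stand-in for the runtime's handling of a submitted frame."""
    return f"runtime displayed frame {frame['id']} (sharpen={frame.get('sharpen', False)})"


def make_logging_layer(next_fn, log):
    """A debug-style layer: records the call, then forwards it unchanged."""
    def layer(frame):
        log.append(f"xrEndFrame called for frame {frame['id']}")
        return next_fn(frame)
    return layer


def make_sharpen_layer(next_fn):
    """An augmenting layer: modifies the call before forwarding it."""
    def layer(frame):
        return next_fn(dict(frame, sharpen=True))
    return layer


def build_chain(runtime_fn, layer_factories):
    """Wrap the runtime so the first factory in the list is called first."""
    fn = runtime_fn
    for factory in reversed(layer_factories):
        fn = factory(fn)
    return fn


if __name__ == "__main__":
    log = []
    end_frame = build_chain(
        runtime_end_frame,
        [lambda nxt: make_logging_layer(nxt, log), make_sharpen_layer],
    )
    print(end_frame({"id": 1}))
    print(log)
```

The application only ever calls the head of the chain, which is why neither the application nor the runtime needs to be modified when a layer is added.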

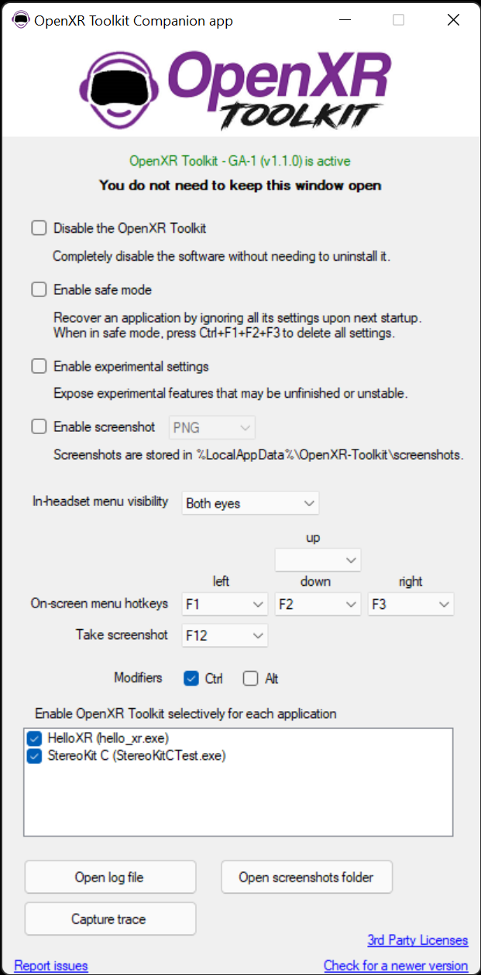

The OpenXR Toolkit (https://mbucchia.github.io/OpenXR-Toolkit/) is an open-source project offering an extensive collection of utilities. It is an OpenXR API layer that sits between the host application and the OpenXR runtime, and it provides a set of features designed to augment the VR experience for end-users. These include performance-oriented capabilities such as resolution upscaling with AMD FidelityFX™ Super Resolution (FSR), and many tweaks to image quality such as sharpening and color control, to name only a few.

The OpenXR Toolkit comes with an installer that automatically inserts the correct information in the registry to add itself as an API layer. The installer also takes care to insert the layer at the right position when it detects that other layers are already installed. Finally, it creates a shortcut in the Start Menu to the Companion application, which provides access to some basic parameters and can even disable the API layer without uninstalling it.

The rest of the settings are accessible via an on-screen menu displayed in the headset within each application (shown further below).

The OpenXR Toolkit and Foveated Rendering

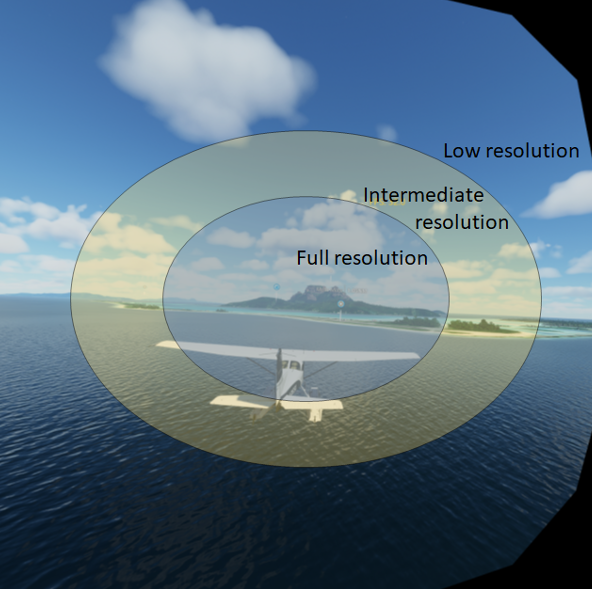

The OpenXR Toolkit offers Fixed Foveated Rendering (FFR), a feature in which each view is divided into 3 regions, and each region is assigned a different shading rate. A typical use case is to keep the highest shading rates in the center, where the VR headset lenses are often best calibrated, while lowering the shading rates in the peripheral vision areas, where human vision cannot discern details. This approach effectively frees some GPU resources and reallocates them where it matters the most visually. Provided the user moves their head so that the important part of the image is in the center of the screen, they will always look at the region with the best clarity (full resolution), and the overall performance is improved due to the reduced quality in the periphery.
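The three-region split described above can be sketched as a simple classification by distance from the center of the view. The radii and the rate assigned to each region below are made-up illustrative values, not the toolkit's defaults, and the circular-in-normalized-space shape is an assumption for the example.

```python
import math

# Hypothetical region boundaries, as a fraction of half the view size.
INNER_RADIUS = 0.35
OUTER_RADIUS = 0.70
# Hypothetical shading rates for the inner, middle, and outer regions.
RATES = ("1x1", "2x2", "4x4")


def ffr_rate(x, y, width, height):
    """Pick a shading rate for pixel (x, y) from its distance to the view center."""
    nx = (x - width / 2) / (width / 2)    # -1 at the left edge, +1 at the right
    ny = (y - height / 2) / (height / 2)  # -1 at the top edge, +1 at the bottom
    radius = math.hypot(nx, ny)
    if radius <= INNER_RADIUS:
        return RATES[0]
    if radius <= OUTER_RADIUS:
        return RATES[1]
    return RATES[2]


if __name__ == "__main__":
    for point in [(1000, 1000), (1500, 1000), (0, 0)]:
        print(point, ffr_rate(*point, 2000, 2000))
```

Because the regions are fixed to the view center, nothing here depends on where the user is actually looking.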

This works quite well for many VR applications because VR users are often used to rotating their heads toward what they want to see more clearly, instead of just moving their eyes, especially because prior to the Varjo Aero, no headset offered aspherical lenses and edge-to-edge clarity.

However, it must be noted that anything that creates habits specific to using VR can also induce negative training. The Varjo Aero headset includes a professional eye tracker and implements the OpenXR EXT_eye_gaze_interaction extension, which enables the application to query the eye gaze. With this information, it should be possible to align the area of high resolution with where the user is looking.

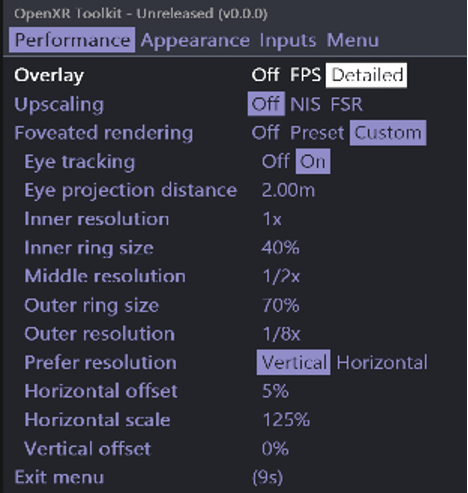

A new feature was recently added to the OpenXR Toolkit: Dynamic Foveated Rendering (DFR). In essence, it combines Variable Rate Shading (VRS), natively supported with Direct3D 12 Ultimate (and Direct3D 11 with NVIDIA), with the eye tracker found in the VR headset. For applications that do not yet leverage the headset’s native eye tracking device, DFR makes it possible to move the high-resolution area to where the user is actually looking. Furthermore, it opens the way to even greater performance benefits, because the full-resolution area only needs to be a few degrees wide while still providing the same perceived quality for the end-user, thanks to the way human vision works.

How it is done

The first step performed during the rendering process is for the OpenXR Toolkit to detect when the application is about to render an image to the headset. This can be a bit more complicated than it sounds: the OpenXR standard defines how an application should present the rendered images to the headset, but it does not dictate how the application should perform its rendering. This allows a wide variety of rendering techniques, from very basic single-pass forward rendering (where the image for each eye is drawn at once directly onto the output surface) to complex multi-pass rendering with deferred shading (where a scene is “painted” in multiple steps into several intermediate surfaces, and the left and right eyes are possibly alternated during the process). The OpenXR Toolkit uses the Detours library from Microsoft Research to intercept key function calls between the application and the Direct3D API. A simple filter analyzes these calls and tries to pick out the “render targets” used by the application that could qualify as actual render views, based on their types and sizes. A second filter stage determines whether these selected render targets are destined for the left or the right eye, using simple heuristics which presume, for example, that the application always renders the left eye first.
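The two filter stages can be sketched roughly as follows. This is a hypothetical reconstruction of the idea only: the `RenderTarget` fields, the 5% size tolerance, and the strict left/right alternation are assumptions made for the example, not the toolkit's actual rules.

```python
from dataclasses import dataclass


@dataclass
class RenderTarget:
    """Minimal stand-in for a render target seen in an intercepted call."""
    width: int
    height: int
    is_color: bool  # False for depth-only or other non-color surfaces


def looks_like_eye_view(target, view_width, view_height, tolerance=0.05):
    """First stage: keep color targets whose size is close to the eye view size."""
    if not target.is_color:
        return False
    return (abs(target.width - view_width) <= tolerance * view_width
            and abs(target.height - view_height) <= tolerance * view_height)


def assign_eyes(targets, view_width, view_height):
    """Second stage: assume the left eye is rendered first, then alternate."""
    eyes = ("left", "right")
    assigned = []
    for target in targets:
        if looks_like_eye_view(target, view_width, view_height):
            assigned.append((target, eyes[len(assigned) % 2]))
    return assigned


if __name__ == "__main__":
    frame_targets = [
        RenderTarget(512, 512, True),      # too small to be an eye view
        RenderTarget(2880, 2720, True),    # candidate: left
        RenderTarget(2880, 2720, False),   # right size, wrong type
        RenderTarget(2880, 2720, True),    # candidate: right
    ]
    for target, eye in assign_eyes(frame_targets, 2880, 2720):
        print(eye, target)
```

Heuristics of this kind can misclassify targets in engines that render in an unusual order, which is why the text describes them as presumptions.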

Once the OpenXR Toolkit knows that the application is about to draw a scene, it can query the eye tracker. This is made very easy by the OpenXR API: an eye tracker is just another device returning a “pose”, which is made of a position and a rotation (quaternion). This is the same principle used for other input devices, such as a VR controller. As a matter of fact, the initial implementation of eye tracking in the OpenXR Toolkit was done entirely using a VR controller to simulate the eye tracker input, and it only takes switching 2 function calls to go from the controller to the eye tracker! The eye tracker provides a pose that represents the eye gaze (the direction in which the user is looking) in 3D space. But this 3D eye gaze is designed for things like user interaction and is not directly usable for foveated rendering. The next step is to project the 3D gaze ray originating from the eyes onto a virtual 2D screen corresponding to the display panel inside the HMD. These calculations are done for each eye, using the corresponding eye position and projection matrix, and produce 2D screen coordinates for each eye. These coordinates correspond to the center of the foveated regions, meaning the center of the area where we want to maximize clarity by using the highest possible shading rate. Note that in the case of multi-pass rendering, the eye tracker is only queried once (upon the first render target that successfully passes the tests described earlier), and the projected screen coordinates are latched for subsequent passes until a new frame begins.
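The projection step can be sketched with a little trigonometry. The version below is a simplification under stated assumptions: the gaze rotation is already expressed relative to the eye's view, the small offset between the eye position and the gaze origin is ignored, the view looks down the negative Z axis with X to the right and Y up, and the field of view is given as four signed angles (left and down negative). The `Fov` type and function names are invented for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class Fov:
    """Signed half-angles of the view frustum, in radians."""
    angle_left: float
    angle_right: float
    angle_up: float
    angle_down: float


def rotate(q, v):
    """Rotate vector v by the unit quaternion q = (x, y, z, w)."""
    x, y, z, w = q
    # t = 2 * cross(q.xyz, v)
    tx = 2 * (y * v[2] - z * v[1])
    ty = 2 * (z * v[0] - x * v[2])
    tz = 2 * (x * v[1] - y * v[0])
    # v' = v + w * t + cross(q.xyz, t)
    return (v[0] + w * tx + (y * tz - z * ty),
            v[1] + w * ty + (z * tx - x * tz),
            v[2] + w * tz + (x * ty - y * tx))


def gaze_to_pixel(gaze_quat, fov, width, height):
    """Project the gaze direction to pixel coordinates (origin at top-left).

    Returns None when the gaze does not point in front of the view.
    """
    dx, dy, dz = rotate(gaze_quat, (0.0, 0.0, -1.0))  # forward is -Z
    if dz >= 0:
        return None
    tan_x, tan_y = dx / -dz, dy / -dz
    left, right = math.tan(fov.angle_left), math.tan(fov.angle_right)
    up, down = math.tan(fov.angle_up), math.tan(fov.angle_down)
    u = (tan_x - left) / (right - left)  # 0 at left edge, 1 at right edge
    v = (up - tan_y) / (up - down)       # 0 at top edge, 1 at bottom edge
    return u * width, v * height


if __name__ == "__main__":
    fov = Fov(-math.pi / 4, math.pi / 4, math.pi / 4, -math.pi / 4)
    print(gaze_to_pixel((0.0, 0.0, 0.0, 1.0), fov, 2000, 2000))
```

With an identity rotation and a symmetric field of view, the result lands at the center of the view; an asymmetric field of view shifts it, which is why the projection has to be done separately for each eye.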

With the 2D screen coordinates corresponding to the eye gaze, the OpenXR Toolkit can now build the input necessary for Variable Rate Shading. At the GPU level, VRS requires only 2 sets of data: a table of rates and a texture. The former tells which rates to use, and the latter tells which of these rates is used over a specific region of the render target. To this effect, the VRS texture divides the render target into regions of fixed tile size, typically 8×8 or 16×16, where each VRS texel is set to a VRS rate index. The GPU then looks up the index and picks the corresponding shading rate from the table. The OpenXR Toolkit leverages this mechanism in the following way: because it must support D3D12, which doesn’t use a separable lookup table, and because DFR requires following the eye gaze direction, which potentially changes every frame, it sets the VRS rate table only once during first initialization (D3D11 only), and it updates the VRS texture every frame. Once the resources necessary for Variable Rate Shading are ready, the OpenXR Toolkit can program the GPU to perform the shading and give control back to the application to perform its rendering.
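The table-plus-texture arrangement can be sketched on the CPU as a small grid of indices into a rate table, with the lowest index placed around the gaze point. This is an illustration of the data layout only: the rate table contents, the ellipse sizes, and the function name are assumptions for the example, and the real work is done on the GPU rather than in a loop like this.

```python
# Hypothetical rate table: index 0 is the finest rate, index 2 the coarsest.
RATE_TABLE = ["1x1", "2x2", "4x4"]
TILE = 16  # one VRS texel covers a 16x16 pixel tile


def build_vrs_texture(width, height, gaze_x, gaze_y,
                      inner=(0.2, 0.2), outer=(0.5, 0.5), tile=TILE):
    """Return rows of rate indices, one per tile, centered on the gaze point.

    inner and outer are ellipse semi-axes as fractions of the view size.
    """
    cols = -(-width // tile)   # ceiling division
    rows = -(-height // tile)
    texture = []
    for row in range(rows):
        line = []
        for col in range(cols):
            # Offset of the tile center from the gaze, normalized by view size.
            dx = ((col + 0.5) * tile - gaze_x) / width
            dy = ((row + 0.5) * tile - gaze_y) / height
            if (dx / inner[0]) ** 2 + (dy / inner[1]) ** 2 <= 1:
                line.append(0)
            elif (dx / outer[0]) ** 2 + (dy / outer[1]) ** 2 <= 1:
                line.append(1)
            else:
                line.append(2)
        texture.append(line)
    return texture


if __name__ == "__main__":
    tex = build_vrs_texture(640, 480, gaze_x=320, gaze_y=240)
    for line in tex[::3]:  # print every third row to keep the output short
        print("".join(str(index) for index in line))
    print("rate under the gaze:", RATE_TABLE[tex[15][20]])
```

Only the grid of indices depends on the gaze, so it is the part that has to be rebuilt each frame, while the table of rates can stay fixed.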

One key performance enabler for this process is the GPU compute shader, which completes the entire process of drawing up to 4 elliptical regions of shading rates per VRS texture, for each eye, in only a few microseconds. Since the entire process resides on the GPU, it does not add any latency due to CPU/GPU synchronization, and it can refresh the VRS texture as fast as the application can refresh the render views, so the user won’t experience any lag when looking around.

Special thanks to Micah Frisby for his support during the development of the feature, letting us try new code on his Varjo Aero headset, and providing very thorough feedback during every experiment.